This machine is for GPU-accelerated scientifique computations of university members. Each GPU can be scheduled to jobs separately. SLURM is used as job scheduler. Operating system is Linux/Ubuntu. Connection and data transfer is possible via ssh (secure shell).

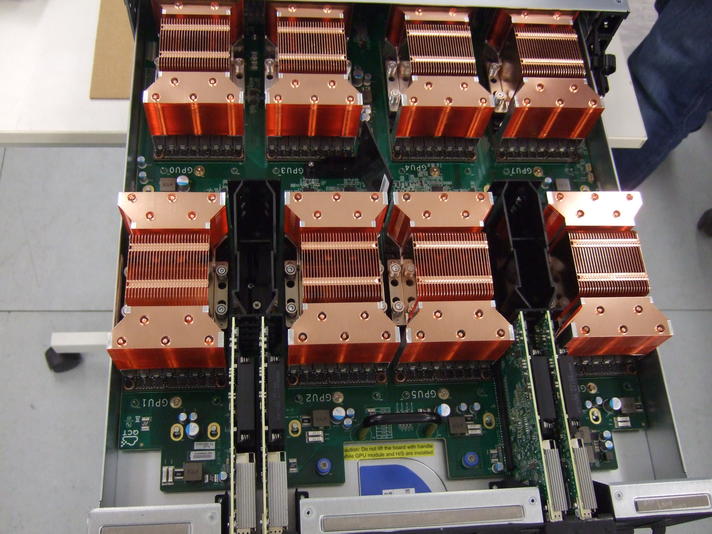

Node: 2x E5-2698 v4 @ 2.20GHz, 512 GB RAM, RAID 7TB GPUs: 8x Tesla V100-SXM2-32GB (each 15.7 SP-TFLOPs) via NVLink Software: Ubuntu 20.04 LTS, Slurm 21.08, GCC-7 to 11, CUDA 12.3 (Feb24) NetStorage: 105TB NVMe via Infiniband 100Gb/s (ab 2022-06)

Access is possible after login using ssh to gpu18.urz.uni-magdeburg.de via intra-uni-network only. An account can be created on formless inquiry to the hpc-admins. Please add information about the project name and its duration (maximum estimated), how much connected GPUs (mostly we expect a single GPU), GPU-memory (max 32GB/GPU), main-Memory and Disk-Space is needed at maximum for each class of jobs you may have. This data will be used to adjust the slurm jobsystem to different needs for best usage i.e. adapting the job queues for different job sizes. Students need a permission (formless email) from its tutor which must be an employee of the university. Please use other smaller GPU-systems if your jobs do not really need 32GB GPU memory to leave the GPUs for users which want that huge GPU-memory and have no other choices (p.e. t100 gpu-nodes). The GPU-memory and its speed is the most expensive part of that kind of GPU-machines. Also note, that the GPU machine though to have huge main memory (512GB), but if that must be distributed to 8 jobs on 8 GPUs only 64 GB memory is left for each users job, which is only a factor of 2 to the GPU-memory. That means you dont have much space to buffer deep-learning data to memory feeding the GPU. If you want more main memory, there must be other users which are satisfied with less than 64GB main memory. Also there is only 7TB SSD-storage for the 8 GPUs. We try to expand this clear bottleneck 2020/2021 by EDR-infiniband storage if we get money for it. Please always be careful in allocating CPUs and memory to avoid conflicts with other jobs. Check scaling before using multiple GPUs for one job. Sharing a single GPU for multiple jobs is not enabled by default to avoid out-of-memory-conflicts for GPU memory (which may crash the GPU, please report your experiences to us). Operating system is Linux Ubuntu. CPU-intense preparation of jobs should not be done on the GPU node. Please use the hpc18 system for it. This machine is not suited for work with personal data. That means there is no encryption and failed disks will be replaced without any possibility to destroy data on this disk before giving it back to a third party due to hardware warranty terms.

#!/bin/bash # history: 2019-09-22, 2021-02-04 #SBATCH -J jobname1 # please replase by short unique self speaking identifier #SBATCH -N 1 # number of nodes, we only have one #SBATCH --gres=gpu:v100:1 # type:number of GPU cards, since 2021-02-03 #SBATCH --mem-per-cpu=4000 # main MB per task? max. 500GB/80=6GB #SBATCH --ntasks-per-node 1 # bigger for mpi-tasks (max 40) #SBATCH --cpus-per-task 5 # max 10/GPU CPU-threads needed (physcores*2) #SBATCH --time 0:59:00 # set 0h59min walltime . /usr/local/bin/slurmProlog.sh # output slurm settings, debugging # please reduce memory usage by using ulimit -v .... # the nvidia-libs do allocate about 2GB ca. 12% (?) of allocable CPU-mem module load cuda # latest cuda, or use cuda/10.0 cuda/10.1 cuda/11.2 echo "debug: CUDA_ROOT=$CUDA_ROOT" nvcc -I $CUDA_ROOT/samples/common/inc -lcublas -o simpleCUBLAS \ $CUDA_ROOT/samples/7_CUDALibraries/simpleCUBLAS/simpleCUBLAS.cpp # srun is needed to make correct GPU allocation via cgroups: srun ./simpleCUBLAS -device=0 # replace by user-program . /usr/local/bin/slurmEpilog.sh # cleanup

#!/bin/bash # last-update: 2019-11-05, 2021-02-03 #SBATCH -J tfjobname2 # please replase by short unique self speaking identifier ###SBATCH -N 1 # number of nodes, we only have one #SBATCH --gres=gpu:v100:1 # type:number of GPU cards #SBATCH --mem-per-cpu=4000 # main MB per task? max. 500GB/80=6GB #SBATCH --ntasks-per-node 1 # bigger for mpi-tasks #SBATCH --cpus-per-task 10 # 10 CPU-threads needed (physcores*2) #SBATCH --time 1:59:00 # set 0h59min walltime # . /usr/local/bin/slurmProlog.sh # output slurm settings, debugging module load cuda # latest cuda, or use cuda/10.0 cuda/10.1 cuda/11.2 echo "debug: CUDA_ROOT=$CUDA_ROOT" testenv=py36tf200 # venv for tensorflow (see lsvirtualenv) # testenv=py38pt # for pyTorch export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3 export VIRTUALENVWRAPPER_VIRTUALENV=/usr/local/bin/virtualenv export WORKON_HOME=/opt/pythonenv/.virtualenvs source /usr/local/bin/virtualenvwrapper.sh workon $testenv # "deactivate" to leave "workon ..." cd /scratch/$USER/; echo "pwd=$(pwd)"; python -V # 3.6.9 2019-10-17 srun python3 $WORKON_HOME/$testenv/samples/minimal_tf.py >slurm-$SLURM_JOBID.pyout 2>&1 deactivate # workoff :) . /usr/local/bin/slurmEpilog.sh # cleanup

sbatch jobscript # send jobfile to job manager Slurm, will be started later squeue -l # show Slurm job list ToDo: show number of allocated GPUs squeue_all # more job list information scancel -u $USER # kill all my jobs (or use optional JobId)

salloc --gres=gpu:v100:1 -n1 -c2 bash # manual tests on bash console, 1task,2cores dflt.maxtime=2h # followed by: srun -u /opt/test/simpleCUBLAS -device=0

History:

2019-01-30 installation + first GPU-test-users 2019-01-30 ssh-access through ssh gpu18.urz.uni-magdeburg.de (intranet only) 2019-03-20 slurm 15.08.7 installed (from ubuntu reposities) 2019-04-08 fixed typo in dns-nameservers (network was partly slow) 2019-06-12 server room power loss due to short (de: Kurzschluss) 2019-08-08 config central kerberos-passwords (university) 2019-08-12 slurm 17.11.12 installed (+dbd for single GPU allocation) 2019-09-22 single GPU-allocation by slurm, old: node allocation 2019-10-29 reboot to get dead nvidia6 running (slurm problem) 2020-01-14 install g++-5 g++-6 g++-7 g++-9 (old: g++-8) 2020-03-11 reboot (nfs4 partly hanging, some non-accessible files, ca. 40 zombies) 2020-03-20 nfs-server overload errors, nfs4 switched to nfs3 testwise 2020-10-05 07:20 system SSD 480GB defect, system down 2020-10-08 SSD replaced, system restore from system backup (2019-11) 2020-10-12 system restored from backup and up, users allowed 2020-10-28 13:55 -crash- uncorrectable memory error 2020-11-23 slurm Fair-Scheduling configured 2021-01-19 gcc-7.5 as default gcc (old gcc-5) 2021-02-02 new slurm gres syntax 2021-02-03 cuda-11.2 installed, plz use "module load cuda" # or cuda/11.2 2021-03-11 quota activated (try "quota -l") 2021-08-09 remote shutdown tests for 2021-08-16 2021-08-16 planned maintenance, no clima, 12h down time 2021-11-21 /nfs_t100 disabled, t100 will be powered off 2021-11-22 upgrade OS from ubuntu-16 to ubuntu-18, /nfs1 broken (nfs4) 2021-11-29 upgrade to DGX-OS 5, ubuntu 20.4. nvidia-470.82 CUDA 11.4 2021-12-01 fix /nfs1 (nfs3 permanently), python, pip 2022-05-16 downtime due to slurm security update 2022-06-14 maintenance, infiniband driver installation problems 2023-10-26 13:36:57 reboot (cuda-inst), /scratch2 unavailable, 15:30 fix 2024-02-21 driver-535 cuda-12.3 installed (thx to FIN-IKS A.K.) +reboot

NVRM: RmInitAdapter failed! (0x24:0x65:1070) NVRM: rm_init_adapter failed for device bearing minor number 6soft reset per nvidia-smi is not possible (wrong reported syntax errors!), linux reboot helps